Is AI making us "dumber"?

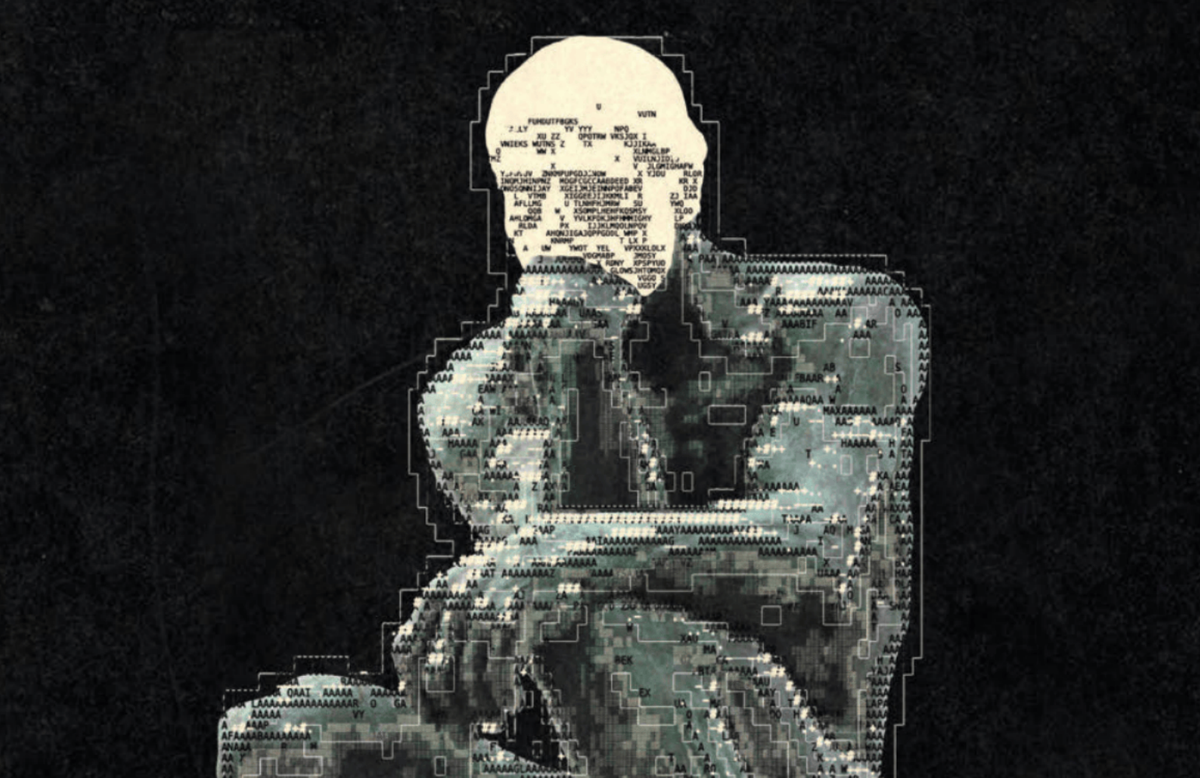

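Several studies suggest that using generative artificial intelligence (AI) may undermine creativity and critical thinking. Users show lower activity in the relevant brain regions and lower scores on critical-thinking tests, although no causal link has yet been established. The core concern is that AI encourages the "cognitive offloading" of complex thinking. This could trap the brain in a vicious cycle of "cognitive miserliness" and weaken independent thought. Whether the long-term cognitive costs outweigh the wider benefits remains to be assessed.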

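Several recent studies suggest that relying heavily on generative AI tools such as ChatGPT may quietly erode the brain's capacity for creative and critical thinking. A recent study at the Massachusetts Institute of Technology (MIT) found that students who wrote essays with AI assistance showed markedly lower neural activity in brain regions associated with creative functions and attention. The finding adds to a growing body of research on the potentially detrimental effects of AI on human cognition. It also raises a deeper question: while we enjoy the short-term efficiency gains AI offers, are we running up a hidden long-term cognitive "debt"?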

Several studies sound the alarm

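Over a series of essay-writing sessions, MIT researchers used electroencephalograms (EEGs) to monitor students' brain activity in real time. Students who used AI showed markedly lower neural activity in regions linked to creative functions and attention than those who worked unaided. Notably, students who had written with the chatbot's help also found it much harder to quote accurately from the essay they had just produced.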

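These findings echo two other high-profile studies of AI use and critical thinking. Microsoft Research surveyed 319 knowledge workers who used generative AI at least once a week. Together they described more than 900 tasks they had completed with AI's help, ranging from summarising lengthy documents to designing a marketing campaign. By their own assessment, only 555 of these tasks required critical thinking, and the rest, roughly 40%, were essentially mindless. Most respondents said they needed "less" or "much less" cognitive effort to complete tasks with AI than without it.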
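The Microsoft Research figures give an exact count of tasks that needed critical thinking (555). For the total, however, they give only a lower bound ("more than 900"). The share of tasks deemed mindless can therefore be bounded but not pinned down. The short Python sketch below does that arithmetic. The function name and the totals it loops over are illustrative assumptions, not figures from the study.

```python
from fractions import Fraction

# Figures reported for the Microsoft Research survey
CRITICAL_TASKS = 555   # tasks participants said required critical thinking
MIN_TOTAL = 900        # the article says "more than 900", so this is only a floor


def mindless_share(total: int, critical: int = CRITICAL_TASKS) -> Fraction:
    """Exact share of tasks that did NOT require critical thinking (hypothetical helper)."""
    if total < critical:
        raise ValueError("total cannot be smaller than the critical-thinking count")
    return Fraction(total - critical, total)


# The share (1 - 555/total) rises with the total,
# so every total above 900 gives a share above this floor.
floor = mindless_share(MIN_TOTAL)

# Smallest whole-number total at which the share strictly exceeds 40%.
threshold = next(n for n in range(MIN_TOTAL + 1, 10_000)
                 if mindless_share(n) > Fraction(2, 5))

print(f"share for any total above 900 is above {float(floor):.1%}")
print(f"share exceeds 40% only if the total is at least {threshold}")

# Hypothetical totals, for illustration only
for total in (901, 925, 950, 1000):
    print(total, f"{float(mindless_share(total)):.1%}")
```

On these figures the mindless share is above 38.3% for any total over 900. It exceeds 40% only if the true total is 926 or more, so "more than 40%" goes beyond what the reported numbers strictly support.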

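Another study, by Michael Gerlich, a professor at SBS Swiss Business School, asked 666 people in Britain how often they used AI and how much they trusted it. It then posed them questions based on a widely used critical-thinking assessment. Participants who used AI more scored lower across the board. Gerlich says that after the study was published, hundreds of high-school and university teachers contacted him, saying it "addresses exactly what they currently experience" with their students. The researchers behind all three studies stress, however, that further work is needed to establish a causal link. People with stronger critical-thinking skills may simply be less inclined to lean on AI. The MIT study also involved only 54 participants and a single narrow task.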

The cost of "cognitive offloading"

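Of course, technology taking over some of our mental labour is nothing new. As long ago as the 5th century BC, Socrates was quoted as complaining that writing is not "a potion for remembering, but for reminding". Calculators spare cashiers from working out a bill in their heads, and navigation apps remove the need to read maps. Yet few would argue that people are less capable as a result.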

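Evan Risko, a professor of psychology at the University of Waterloo in Canada, coined the term "cognitive offloading" with his colleague Sam Gilbert. The term describes how people hand difficult or tedious mental tasks to external aids. Risko says there is little evidence that letting machines do our mental bidding alters the brain's inherent capacity for thinking.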

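The worry, as Risko puts it, is that generative AI lets us "offload a much more complex set of processes". Offloading some mental arithmetic, which has only a narrow set of applications, is not the same as offloading a thought process such as writing or problem-solving. Once the brain has developed a taste for offloading, the habit can be hard to kick. The tendency to seek the least effortful way to solve a problem is known as "cognitive miserliness". It could create what Gerlich describes as a feedback loop: as AI-reliant individuals find it harder to think critically, their brains may become more miserly, which leads to still more offloading. One heavy user of generative AI in Gerlich's study lamented: "I rely so much on AI that I don't think I'd know how to solve certain problems without it."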

How can we keep our brains fit?

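Over the long run, such cognitive decline could erode companies' competitiveness. "Long-term critical-thinking decay would likely result in reduced competitiveness," says Barbara Larson, a professor of management at Northeastern University in the United States. Prolonged AI use could also make employees less creative. In a study at the University of Toronto, 460 participants proposed imaginative uses for everyday objects such as a car tyre or a pair of trousers. Those who had been exposed to AI-generated ideas produced answers judged less creative and less diverse than those of a control group who worked unaided.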

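Researchers have proposed several strategies to keep AI from making us "lazy". Larson suggests limiting AI's role to that of "an enthusiastic but somewhat naive assistant". Gerlich recommends prompting a chatbot at each step on the path to a solution rather than asking it for the final answer. For instance, instead of asking "Where should I go for a sunny holiday?", one could start by asking where it rains the least and proceed from there.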

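Some research teams are also building AI designed to prompt deeper thought. Members of the Microsoft team have been testing assistants that interrupt users with "provocations". A team from Emory and Stanford universities has proposed redesigning chatbots as "thinking assistants" that ask users probing questions rather than simply supplying answers. Such approaches can backfire in practice. A study at Abilene Christian University in Texas found that AI assistants which repeatedly jumped in with provocations degraded the performance of weaker coders on a simple programming task. A blunter approach, "cognitive forcing", requires users to come up with their own answer, or simply wait a few minutes, before they can access the AI. Zana Buçinca, a Microsoft researcher who studies these techniques, says this may help users perform better but will be unpopular: "People do not like to be pushed to engage." Demand for workarounds would probably be high. In a demographically representative survey by the consultancy Oliver Wyman across 16 countries, 47% of respondents said they would use generative-AI tools even if their employer forbade it.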

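The technology is still so young that, for many tasks, the human brain remains the sharpest tool available. In time, however, both consumers and regulators of AI will have to assess whether its wider benefits outweigh any cognitive costs. A more fundamental question remains: if stronger evidence emerges that AI does make people less intelligent, will we care? [End of article]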

Analysis of the original

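First, we need to understand the substance of the report. Its central theme is whether generative AI, for all the convenience it brings, may cause long-term harm to people's capacity for creative and critical thinking. Rather than offering a simple "yes" or "no", the article draws on several recent studies to present a complex and balanced picture. Many 川透社 readers are unfamiliar with AI and cognitive science, so several pieces of background need explaining. For "generative AI", we should explain that it is not merely a chat tool like ChatGPT but a class of systems that generate text, images and other content. That generative capacity is precisely why it bears on creativity. A literal translation of the technical term "cognitive offloading" is not enough. Like the original, we should use everyday analogies such as calculators and navigation apps. We should then point out how these differ fundamentally from AI's offloading of complex thought processes, so that readers grasp the key point.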

In genre and structure, the piece is not time-sensitive hard news but a typical analytical or explanatory feature. Its argument builds step by step, as follows:

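- Opening with a case: the article uses an engaging electroencephalogram (EEG) study from the Massachusetts Institute of Technology (MIT) as its "hook". The study vividly raises the core issue: using AI lowers neural activity in specific brain regions.

- Broadening the argument: the article then cites two further studies, from Microsoft Research and a Swiss business school. They approach the question from two angles, knowledge workers' self-assessments and members of the public's scores on a critical-thinking test. Together they extend the argument from a single case to a broader pattern.

- Bringing in counterpoints and history: for balance, the article introduces historical analogies (Socrates' worry about writing, the arrival of calculators) and the theory of "cognitive offloading". It acknowledges that technology has always shouldered mental labour and that not all "offloading" is harmful, avoiding a one-sided critique of technology.

- Sharpening the core risk: having weighed both sides, the article focuses on what makes generative AI distinctive and where the real risk lies: it offloads "a much more complex set of processes". It then introduces the feedback loop of "cognitive miserliness" to show the potential long-term harm.

- Proposing and evaluating solutions: the article then turns constructive. It lists several countermeasures (treating AI as an assistant, prompting step by step, building "thinking assistant" AI) and weighs their practicality and limitations, reflecting a rigorous analytical approach.

- Conclusion and outlook: finally, the article widens its lens to society, noting that consumers and regulators will eventually have to weigh AI's costs and benefits. It ends with an open question that invites readers to reflect on how society will think in future.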

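On sources, bias and stance: the source, The Economist, is a globally renowned weekly known for its incisive analysis and its classical liberalism, and on economic issues it is usually regarded as centre-right. In this article on technology ethics, however, its political leaning is not apparent. More notable are its habitual elite perspective and its rational, measured analytical style. The article does not stoke "AI threat" panic. Instead, it frames the issue as a cost-benefit analysis of the trade-off between productivity and cognitive ability, a calm, evidence-based approach typical of the publication. From an international-communication perspective, the topic and the studies cited (all from leading institutions in North America and Europe) are rooted in the context of developed Western countries. Its focus is the challenges facing "knowledge workers". When compiling, we need to keep this context in mind and make sure our readers understand this as cutting-edge thinking from a particular stage of social development.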

Compilation recommendations

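Given this analysis, I recommend a single-article news compilation for this long, information-dense and tightly argued piece, rather than a straight full or abridged translation. This approach keeps the original's core arguments and evidence. It also lets us restructure and explain the content to suit the reading habits and background knowledge of 川透社 readers, so the piece communicates better.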

The compilation can proceed as follows:

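First, rework the headline and lead. The original headline, "Artificial stupidity", is a clever play on "artificial intelligence". A direct translation (人工智能之愚) may lose its flavour or seem overly sensational. We can replace it with a more direct, thought-provoking headline, for example: "人工智能让我们变“笨”了吗?最新研究揭示其对创造性思维的潜在影响" ("Is AI making us 'dumber'? New research reveals its potential impact on creative thinking"). For the lead, we need not follow the original's narrative opening. Instead, we can write a summary lead that states the core findings and central tension up front: several studies suggest that frequent use of AI tools may reduce brain activity related to creativity and attention, raising concern about its long-term effects.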

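Second, in the body, we should reorganise information to strengthen the logic. For example, the three studies from MIT, Microsoft Research and the Swiss business school can be presented as a single block of evidence on "the potential risks of AI". This makes the argument more focused, and the block should keep the researchers' caveat that causation has not been established. The Socrates example and some of the more involved academic reasoning can be trimmed, keeping the focus on research findings and practical impact.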

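Most importantly, the compilation must add value. For the first mention of an institution, as in "麻省理工学院(Massachusetts Institute of Technology, MIT)", or of a technical term, as in "认知卸载(cognitive offloading)", we should follow our compilation style guide and give the original in parentheses after the translation. Where needed, we can add a very brief explanation to help readers over the comprehension barrier. This is what distinguishes professional compilation from simple translation.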

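Handled this way, the compilation can faithfully convey the essence of the original while making the piece easier for 川透社 readers to understand and absorb.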

Original article

Artificial stupidity

How AI changes the way you think

Creativity and critical thinking seem to take a hit. But there are ways to soften the blow

AS ANYBODY WHO has ever taken a standardised test will know, racing to answer an expansive essay question in 20 minutes or less takes serious brain power. Having unfettered access to artificial intelligence (AI) would certainly lighten the mental load. But as a recent study by researchers at the Massachusetts Institute of Technology (MIT) suggests, that help may come at a cost.

Over the course of a series of essay-writing sessions, students working with (as well as without) ChatGPT were hooked up to electroencephalograms (EEGs) to measure their brain activity as they toiled. Across the board, the AI users exhibited markedly lower neural activity in parts of the brain associated with creative functions and attention. Students who wrote with the chatbot's help also found it much harder to provide an accurate quote from the paper that they had just produced.

The findings are part of a growing body of work on the potentially detrimental effects of AI use for creativity and learning. This research points to important questions about whether the impressive short-term gains afforded by generative AI may incur a hidden long-term debt.

The MIT study augments the findings of two other high-profile studies on the relationship between AI use and critical thinking. The first, by researchers at Microsoft Research, surveyed 319 knowledge workers who used generative AI at least once a week. The respondents described undertaking more than 900 tasks, from summarising lengthy documents to designing a marketing campaign, with the help of AI. According to participants' self-assessments, only 555 of these tasks required critical thinking, such as having to review an AI output closely before passing it to a client, or revising a prompt after the AI generated an inadequate result on the first go. The rest of the tasks were deemed essentially mindless. Overall, a majority of workers reported needing either less or much less cognitive effort to complete tasks with generative-AI tools such as ChatGPT, Google Gemini or Microsoft's own Copilot AI assistant, compared with doing those tasks without AI.

Another study, by Michael Gerlich, a professor at SBS Swiss Business School, asked 666 individuals in Britain how often they used AI and how much they trusted it, before posing them questions based on a widely used critical-thinking assessment. Participants who made more use of AI scored lower across the board. Dr Gerlich says that after the study was published he was contacted by hundreds of high-school and university teachers dealing with growing AI adoption among their students who, he says, "felt that it addresses exactly what they currently experience".

Whether AI will leave people's brains flabby and weak in the long term remains an open question. Researchers for all three studies have stressed that further work is needed to establish a definitive causal link between elevated AI use and weakened brains. In Dr Gerlich's study, for example, it is possible that people with greater critical-thinking prowess are just less likely to lean on AI. The MIT study, meanwhile, had a tiny sample size (54 participants in all) and focused on a single narrow task.

Moreover, generative-AI tools explicitly seek to lighten people's mental loads, as many other technologies do. As long ago as the 5th century BC, Socrates was quoted as grumbling that writing is not "a potion for remembering, but for reminding". Calculators spare cashiers from computing a bill. Navigation apps remove the need for map-reading. And yet few would argue that people are less capable as a result.

There is little evidence to suggest that allowing machines to do users' mental bidding alters the brain's inherent capacity for thinking, says Evan Risko, a professor of psychology at the University of Waterloo who, along with a colleague, Sam Gilbert, coined the term "cognitive offloading" to describe how people shrug off difficult or tedious mental tasks to external aids.

The worry is that, as Dr Risko puts it, generative AI allows one to "offload a much more complex set of processes". Offloading some mental arithmetic, which has only a narrow set of applications, is not the same as offloading a thought process like writing or problem-solving. And once the brain has developed a taste for offloading, it can be a hard habit to kick. The tendency to seek the least effortful way to solve a problem, known as "cognitive miserliness", could create what Dr Gerlich describes as a feedback loop. As AI-reliant individuals find it harder to think critically, their brains may become more miserly, which will lead to further offloading. One participant in Dr Gerlich's study, a heavy user of generative AI, lamented "I rely so much on AI that I don't think I'd know how to solve certain problems without it."

Many companies are looking forward to the possible productivity gains from greater adoption of AI. But there could be a sting in the tail. "Long-term critical-thinking decay would likely result in reduced competitiveness," says Barbara Larson, a professor of management at Northeastern University. Prolonged AI use could also make employees less creative. In a study at the University of Toronto, 460 participants were instructed to propose imaginative uses for a series of everyday objects, such as a car tyre or a pair of trousers. Those who had been exposed to ideas generated by AI tended to produce answers deemed less creative and diverse than a control group who worked unaided. When it came to the trousers, for instance, the chatbot proposed stuffing a pair with hay to make half of a scarecrow, in effect suggesting trousers be reused as trousers. An unaided participant, by contrast, proposed sticking nuts in the pockets to make a novelty bird feeder.

Get with the program

There are ways to keep the brain fit. Dr Larson suggests that the smartest way to get ahead with AI is to limit its role to that of "an enthusiastic but somewhat naive assistant". Dr Gerlich recommends that, rather than asking a chatbot to generate the final desired output, one should prompt it at each step on the path to the solution. Instead of asking it "Where should I go for a sunny holiday?", for instance, one could start by asking where it rains the least, and proceed from there.

Members of the Microsoft team have also been testing AI assistants that interrupt users with "provocations" to prompt deeper thought. In a similar vein, a team from Emory and Stanford Universities have proposed rewiring chatbots to serve as "thinking assistants" that ask users probing questions, rather than simply providing answers. One imagines that Socrates might heartily approve.

Such strategies might not be all that useful in practice, however, even in the unlikely event that model-builders tweaked their interfaces to make chatbots clunkier, or slower. They could even come at a cost. A study by Abilene Christian University in Texas found that AI assistants which repeatedly jumped in with provocations degraded the performance of weaker coders on a simple programming task.

Other potential measures to keep people's brains active are more straightforward, if also rather more bossy. Overeager users of generative AI could be required to come up with their own answer to a query, or simply wait a few minutes, before they're allowed to access the AI. Such "cognitive forcing" may lead users to perform better, according to Zana Buçinca, a researcher at Microsoft who studies these techniques, but will be less popular. "People do not like to be pushed to engage," she says. Demand for workarounds would therefore probably be high. In a demographically representative survey conducted in 16 countries by Oliver Wyman, a consultancy, 47% of respondents said they would use generative-AI tools even if their employer forbade it.

The technology is so young that, for many tasks, the human brain is still the sharpest tool in the toolkit. But in time both the consumers of AI and its regulators will have to assess whether its wider benefits outweigh any cognitive costs. If stronger evidence emerges that AI makes people less intelligent, will they care?